AI in Healthcare: How LLMs Are Transforming Medical Diagnosis and Ethics

Currently, artificial intelligence models play a significant role in the healthcare sector, primarily by assisting in diagnostics and in understanding medical problems. They are also valuable at the organizational level, most notably during patient screening, where AI chatbots can collect patient history, signs, and symptoms to help set priorities. Beyond that, AI can help personalize medical care and support hospital management.

AI in Healthcare: Large Language Models in Medical Diagnostics

Language models, which don't even need to be large ones, can genuinely amplify human skills and abilities. When AI is incorporated into well-established, well-documented processes, it can take on tasks that would otherwise require several analysts, completing them much faster and sometimes grasping context better than humans do. These models also keep improving in diagnostics, in the discovery of new medicines and treatments, and in real-time decision support. AI is already of immense value in healthcare, and its importance will likely become even clearer over time.

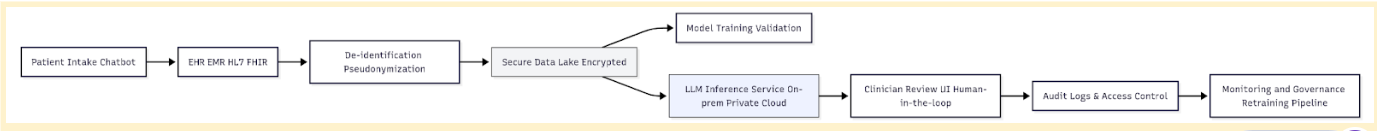

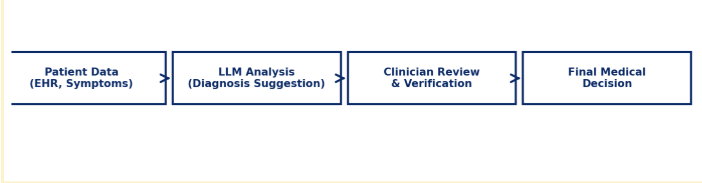

To help you visualize how AI and large language models fit into the day-to-day routines of custom healthcare software, here is a diagram of a high-level architecture. It highlights data flow, privacy measures, and human oversight.

This architecture shows how patient information travels through a secure, privacy-focused pipeline before it reaches the AI system. First, data is gathered from the patient's history and previous interactions and combined with new information about their current condition. Next, the data is de-identified and handed to the language model, which supports diagnostic reasoning, summarizes clinical findings, or assists in treatment planning. The two most important characteristics of this process are de-identification and automation: the patient's identity is not exposed, and the pipeline itself runs without manual intervention, while clinicians oversee the results.
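The pipeline steps described above (gather, merge, de-identify, hand off to the model) can be sketched in a few functions. This is a minimal illustration under stated assumptions: the field names, the `DIRECT_IDENTIFIERS` set, and the regular expressions are hypothetical and far from a complete de-identification scheme, and `call_model` stands in for whatever LLM client you actually use.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field
from typing import Callable

# Direct identifiers removed before anything reaches the model (illustrative, not exhaustive).
DIRECT_IDENTIFIERS = {"name", "patient_id", "address", "phone", "email"}

# Simple patterns for identifiers hidden in free text (illustrative only).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
LONG_NUMBER_RE = re.compile(r"\d[\d\s-]{6,}\d")  # phone numbers, record numbers, similar digit runs


@dataclass
class PatientRecord:
    fields: dict
    history: list[str] = field(default_factory=list)


def merge_encounter(record: PatientRecord, current_findings: dict) -> dict:
    """Step 1: join the stored history with information about the current condition."""
    return {**record.fields, "history": list(record.history), "current": dict(current_findings)}


def mask_free_text(text: str) -> str:
    """Replace e-mail addresses and long digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return LONG_NUMBER_RE.sub("[REDACTED]", text)


def deidentify(case: dict) -> dict:
    """Step 2: drop direct identifiers and mask obvious ones left in free text."""
    clean = {k: v for k, v in case.items() if k not in DIRECT_IDENTIFIERS}
    clean["history"] = [mask_free_text(entry) for entry in clean["history"]]
    clean["current"] = {k: mask_free_text(str(v)) for k, v in clean["current"].items()}
    return clean


def build_prompt(case: dict) -> str:
    """Step 3: turn the de-identified case into a prompt for the language model."""
    lines = [f"{k}: {v}" for k, v in case.items() if k not in ("history", "current")]
    lines += [f"History: {entry}" for entry in case["history"]]
    lines += [f"{k}: {v}" for k, v in case["current"].items()]
    return "Summarize the clinical findings and suggest differential diagnoses.\n" + "\n".join(lines)


def run_pipeline(record: PatientRecord, current_findings: dict,
                 call_model: Callable[[str], str]) -> str:
    """Step 4: only the de-identified prompt is handed to the model."""
    return call_model(build_prompt(deidentify(merge_encounter(record, current_findings))))


# Usage with an echo function standing in for a real model, so we can inspect what would be sent.
record = PatientRecord(
    fields={"name": "Jane Doe", "patient_id": "123", "age": 54, "sex": "F"},
    history=["Smoker for 20 years", "Contact: 555-123-4567, jane@example.com"],
)
prompt_sent = run_pipeline(record, {"symptoms": "persistent cough"}, call_model=lambda p: p)
print(prompt_sent)
```

Passing the model call in as a plain function keeps the privacy steps testable on their own: you can check exactly what text would leave the hospital before wiring in a real provider.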

Understanding Large Language Models in a Medical Context

Modern AI assistants are built on what is called a large language model, or LLM for short. These are computer programs that can understand natural language and generate text after “reading” huge amounts of material, such as books, scientific journals, websites, and medical pamphlets. That text is processed as tokens (small chunks of text, such as words or parts of words). The “large” in the name refers mainly to the number of parameters the model learns during training, and larger models are usually trained on more tokens, hence why some are called large models and others small. These models specialize in capturing context and patterns in text, and, thanks to their training, they can read a sequence of words and determine which word is most likely to come next.
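To make the next-word idea concrete, here is a toy sketch. It is a bigram counter, not how a real LLM works internally (LLMs use neural networks over tokens and far more context), but it shows the same principle: learn from training text which word tends to follow another, then predict the most probable continuation. The three-sentence corpus is made up for illustration.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it never had a successor."""
    counts = follows.get(word.lower())
    return counts.most_common(1)[0][0] if counts else None


corpus = [
    "chest x-ray shows upper lobe opacity",
    "chest pain radiating to the left arm",
    "chest x-ray shows bilateral infiltrates",
]
model = train_bigrams(corpus)
print(predict_next(model, "chest"))  # -> x-ray ("x-ray" follows "chest" twice, "pain" once)
print(predict_next(model, "x-ray"))  # -> shows
```

Note that the toy model has no answer at all for words it never saw; a real LLM will still produce fluent text in that situation, which is one root of the hallucinations discussed later.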

That's the basis of how modern artificial intelligence works: recognizing language patterns and predicting the next word. This approach has proven extremely powerful when a model is specialized in a specific area of knowledge through dedicated training data, which can yield more compact models with highly precise predictions in that domain. It is therefore of immense value in a field such as healthcare, which requires context understanding, theoretical knowledge, and critical thinking. This is where AI shines: it relates the current prompt to patterns learned during training, connecting a new situation to similar ones it has seen before, which lets it suggest diagnoses very quickly.

Therefore, diagnosis is one of the most important applications of LLMs in healthcare. AI models that analyze exam images can already detect some cancers, including at early stages, and in some studies they have matched or outperformed specialists at this task. There are other uses as well, such as summarizing medical cases, answering clinical questions, interpreting medical records, and communicating with patients. However, AI models can never fully replace well-trained human doctors. The model will, from time to time, hallucinate: it may deliver nonsensical responses, fabricate information, or misdiagnose, especially when it encounters situations it has never seen before.

Key Benefits of LLM-Assisted Diagnostics

As mentioned, implementing language models in healthcare brings several competitive advantages and can improve the patient experience through shorter response times and more accurate diagnostics, among other benefits. Screening and anamnesis (patient history-taking) become faster, which helps physicians and patients alike. This is especially useful in remote regions with few doctors available, where it can save a great deal of time in patient care and potentially even save lives.

Improved Diagnostic Accuracy

Several scientific studies have already demonstrated the power of AI agents integrated into diagnosis and patient care. For instance, a study published in Science reports that AI tools can reach a diagnostic accuracy of 0.986, close to the maximum of 1.0. Another study, published in JAMA Internal Medicine, shows that even ChatGPT-4, which is by no means a specialized model, can surpass physicians on clinical reasoning tasks. Both experience and scientific evidence therefore point to real advantages in using AI models in healthcare processes, although, as discussed below, they also come with risks.
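Diagnostic performance figures like these typically come from comparing a model's calls against confirmed diagnoses. As a rough sketch, assuming a simple has-condition / does-not-have-condition decision, the most common metrics are computed from a confusion matrix. The counts below are invented purely for illustration and are not taken from either study, which may also report different metrics.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # sick patients correctly flagged
    fp: int  # healthy patients wrongly flagged
    tn: int  # healthy patients correctly cleared
    fn: int  # sick patients missed

    def accuracy(self) -> float:
        """Share of all cases the model got right."""
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)

    def sensitivity(self) -> float:
        """Share of sick patients the model caught."""
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        """Share of healthy patients the model correctly cleared."""
        return self.tn / (self.tn + self.fp)


# Invented counts for illustration only.
cm = ConfusionMatrix(tp=90, fp=20, tn=880, fn=10)
print(f"accuracy={cm.accuracy():.3f} sensitivity={cm.sensitivity():.3f} specificity={cm.specificity():.3f}")
# -> accuracy=0.970 sensitivity=0.900 specificity=0.978
```

In this made-up example, overall accuracy is high while one in ten sick patients is still missed, which is why sensitivity and specificity are usually reported alongside a single headline number.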

This chart shows how the diagnostic process works. First, patient data is gathered from the current presentation, the patient's history, and personal information. Then, it is analyzed by the LLM using task-specific prompt engineering. Next, a clinician reviews the output and verifies whether it makes sense, and only then is the final medical decision made. That decision must be made by an actual physician, or at least by a human: an AI agent should never be blindly trusted or followed, as it can hallucinate and present invented information as fact.
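The rule that the final decision belongs to a human can be enforced in code rather than left to habit. The sketch below is one possible design, with hypothetical class and field names: the LLM step only produces an `AISuggestion`, and a `ClinicalDecision` can only be created once a named clinician records the diagnosis.

```python
from __future__ import annotations

from dataclasses import dataclass


class ReviewRequired(Exception):
    """Raised when someone tries to finalize a case without clinician sign-off."""


@dataclass
class AISuggestion:
    case_id: str
    differentials: list[str]  # ranked output of the LLM analysis step
    model_name: str


@dataclass
class ClinicalDecision:
    case_id: str
    diagnosis: str
    decided_by: str            # clinician accountable for the decision
    matches_ai_suggestion: bool


def finalize(suggestion: AISuggestion, clinician: str | None, diagnosis: str | None) -> ClinicalDecision:
    """Turn an AI suggestion into a decision only after a named clinician records the diagnosis."""
    if not clinician or not diagnosis:
        raise ReviewRequired("A clinician must review the suggestion and record the final diagnosis.")
    return ClinicalDecision(
        case_id=suggestion.case_id,
        diagnosis=diagnosis,
        decided_by=clinician,
        matches_ai_suggestion=diagnosis in suggestion.differentials,
    )


suggestion = AISuggestion("case-001", ["pneumonia", "tuberculosis"], model_name="clinical-llm-v1")
decision = finalize(suggestion, clinician="Dr. A", diagnosis="tuberculosis")
print(decision.matches_ai_suggestion)  # -> True
```

Recording whether the clinician's diagnosis matched one of the AI's suggestions also produces the kind of data that later audits can use to measure how often the model was right.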

Time and Cost Efficiency

Employing AI agents in patient care can offer a significant return on investment because of the improvements it brings to the speed and quality of service. It can help save lives, since hospital screening and anamnesis become much faster. Patients also benefit from a more reliable diagnosis and from being referred to the most appropriate treatment. Finally, hospital expenses can fall, as work is done more efficiently and with a smaller margin of error, making it a win-win for hospitals and patients.

Real-World Use Cases and Emerging Solutions

There are already several successful use cases of AI in the healthcare market. For instance, the Brazilian Hospital Sírio-Libanês has developed an application built on a proprietary, locally trained large language model called Sofya. It is a complete solution that helps physicians diagnose and organize their cases and patients; in their own words, it “acts as a medical second brain that reduces digital bureaucracy, structures data, and elevates the standard of care”. It is reported to reduce bureaucracy by 30% and to have optimized more than 500,000 medical appointments, with 94% satisfaction among doctors and health administrators.

Additionally, Subtle Medical has developed SubtleHD, a tool for faster and more accurate reading of MRI scans. It improves image quality on both standard and accelerated imaging protocols by reducing image noise across the entire body, which lets radiologists expedite patient care and reduces manual work. Their suite of AI tools includes other applications, such as SubtlePET, which enhances PET images.

Lastly, another successful example of an AI-driven healthcare company is HiLab, a practical demonstration of how AI can assist in diagnosis and patient care. The company uses AI to process health data and deliver real-time insights, producing fast results while saving time and resources. It relies on four different devices: the smallest delivers results for simple tests in as little as 8 minutes, and molecular-level results are available in up to one hour, using a method analogous to RT-PCR.

Ethical Challenges and Regulatory Considerations

Even though there are many benefits to employing AI extensively in your care services, it also has downsides and ethical implications. First and foremost, data privacy is always the biggest concern when large language models are involved: to be trained, they require terabytes of data, and in healthcare that means mostly real patient data rather than synthetic data. Furthermore, because a model is limited by its training data, it sometimes fails when faced with new situations, such as underrepresented minority groups or new diseases. Finally, even though AI can produce very good guesses, they remain probabilistic predictions, which means an actual physician still has to interpret them and make the final diagnosis.

Data Privacy and Patient Consent

One of the biggest problems with AI tools is how dependent they are on real data from actual users. In healthcare applications, training a model means using patients' cases, diseases, diagnoses, and other relevant information. Although this information is anonymized, it can sometimes still be traced back to the patient, and patients are often not even aware that their data will be used to train a model, so they never give explicit consent. Needless to say, that is a serious problem and can lead to leaks of very personal information about someone who did not even know that third parties were reading their data. It is therefore crucial to develop large language models ethically, clearly informing patients about how their personal information is used and obtaining their consent before it enters the training process.
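Consent and de-identification can both be enforced at the point where a training set is assembled. The following is a minimal sketch assuming a hypothetical `Record` type with a `consented_to_training` flag: only consenting patients are kept, and real IDs are replaced with a keyed hash (HMAC-SHA256 from Python's standard library), so the same patient always maps to the same pseudonym but the mapping cannot be recomputed without the secret key. Pseudonymization alone does not guarantee anonymity; free-text notes can still contain identifying details, which is exactly the re-identification risk described above.

```python
from __future__ import annotations

import hashlib
import hmac
from dataclasses import dataclass


@dataclass
class Record:
    patient_id: str
    consented_to_training: bool  # explicit, recorded consent (hypothetical field)
    diagnosis: str
    notes: str


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace the real ID with a keyed hash; without the key, IDs cannot be matched to pseudonyms."""
    return hmac.new(secret_key, patient_id.encode(), hashlib.sha256).hexdigest()[:16]


def build_training_set(records: list[Record], secret_key: bytes) -> list[dict]:
    """Keep only patients who explicitly consented, and strip their real identifiers."""
    return [
        {"pid": pseudonymize(r.patient_id, secret_key), "diagnosis": r.diagnosis, "notes": r.notes}
        for r in records
        if r.consented_to_training
    ]


# The key should live in a secrets manager, never next to the data; this literal is for illustration.
key = b"example-secret-key"
records = [
    Record("P001", True, "asthma", "wheezing after exercise"),
    Record("P002", False, "copd", "progressive dyspnea"),
]
training_set = build_training_set(records, key)
print(len(training_set))  # -> 1 (P002 did not consent)
```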

Here's a simple Python example of how a de-identified clinical case can be sent to a language model as a carefully constrained prompt, which you can adapt to your data processing system:

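```python
# Hypothetical stand-in for a real LLM client; replace it with your provider's SDK.
class PlaceholderLLM:
    def generate(self, prompt: str) -> str:
        # A real client would send the prompt to a model; this stub only reports its size.
        return f"[model output for a {len(prompt)}-character prompt]"


llm = PlaceholderLLM()

# De-identified case: no name, ID, or contact details, only clinically relevant facts.
clinical_context = """
Patient: Female, 54
Symptoms: persistent cough, night sweats, fatigue
History: smoker for 20 years, no recent travel
Tests: chest X-ray indicates upper lobe opacity
"""

# The instructions keep the model cautious: evidence-based suggestions, no definitive conclusions.
prompt = f"""
You are a medical diagnostics assistant.
Analyze the following case and propose 3 differential diagnoses.
Only suggest conditions supported by the data.
Do not make definitive conclusions.
Return output in a structured format.

Case:
{clinical_context}
"""

response = llm.generate(prompt)
print(response)
```

Bias and Fairness in AI Models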

Furthermore, large language models work by drawing on what they learned during training, relating it to the user's input, and then generating a response. If a model has little or no knowledge of a new context or type of input, it may still produce a plausible-sounding but fabricated answer, known as a hallucination. This is also where bias comes in: when a group, such as the minority groups mentioned above, is underrepresented in the training data, the model is more likely to make mistakes about it. Perhaps the biggest problem with hallucinations is how confidently they are delivered: the model may sound completely certain while being wrong. This can lead to serious mistakes if whoever interprets the response doesn't take the time to judge whether it makes sense. In healthcare, this can be the difference between life and death, as a misdiagnosis can have severe consequences for a patient's health. So, however reliable the AI may be, a human is still needed to interpret and understand its responses.
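One practical heuristic for spotting shaky answers is to ask the model the same question several times (with sampling enabled) and check whether the answers agree; low agreement suggests the model is guessing. The sketch below assumes a hypothetical `ask_model` callable and an arbitrary 80% agreement threshold. It is only a triage aid: a model can repeat the same wrong answer every time, so agreement never replaces clinician review.

```python
from __future__ import annotations

from collections import Counter
from typing import Callable


def flag_for_review(ask_model: Callable[[str], str], prompt: str,
                    samples: int = 5, min_agreement: float = 0.8) -> tuple[str, bool]:
    """Sample the same prompt several times and flag the case if the answers disagree.

    Returns the most frequent (normalized) answer and True when it needs extra scrutiny.
    Agreement is not proof of correctness: a model can repeat the same wrong answer.
    """
    answers = [ask_model(prompt).strip().lower() for _ in range(samples)]
    top_answer, count = Counter(answers).most_common(1)[0]
    return top_answer, count / samples < min_agreement


# Canned replies stand in for five sampled model outputs.
replies = iter(["Pneumonia", "pneumonia", "Tuberculosis", "pneumonia", "pneumonia"])
answer, flagged = flag_for_review(lambda _: next(replies), "case summary")
print(answer, flagged)  # -> pneumonia False (4 of 5 answers agree)
```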

Best Practices for AI Healthcare Providers and Tech Teams

To implement AI in healthcare responsibly and safely, some steps must be taken. Remember that the focus is always on offering the best possible service to patients, who must be cared for in every way, especially in very sensitive situations. As mentioned, user privacy must be a top priority, since a leak can expose very personal information about illnesses and medical conditions. To help you bring AI into your medical services, we've put together this short guide on preserving the integrity and proper functioning of such a system:

- Build a multidisciplinary implementation team: Using AI in such a sensitive area has drawbacks and can even be dangerous from some viewpoints, so AI tools must be carefully planned and executed. To do so, create a task force that includes clinicians, data scientists, legal advisors, cybersecurity specialists, and ethics consultants. Each professional's point of view will complement the others, helping ensure the new system is implemented responsibly.

- Design with privacy by default: As discussed, patient confidentiality must be a non-negotiable requirement from day one. Patient data should never be individually identifiable or traceable, access to it should be strictly controlled, and it should be encrypted whenever possible. If you plan to train models in your hospital, consider federated learning, which keeps the data within the hospital's secure environment.

- Keep a human in the loop: However automated and smart AI might be, it can never be left alone. Its conclusions must never be accepted blindly; everything must be thoroughly reviewed and understood by qualified personnel. There must always be a human in the loop to exercise proper clinical judgment, reinforcing that the technology is merely a tool, a means to an end, that a human mind must guide.
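The federated learning option mentioned under privacy by default can be illustrated with its core aggregation step, federated averaging (FedAvg): each hospital trains on its own data and sends back only model weights plus its sample count, and a coordinator averages the weights, weighting each site by how much data it has. This is a minimal sketch with plain lists standing in for model parameters; `local_update` is a hypothetical one-step stand-in for real local training, and real deployments would rely on a dedicated framework.

```python
from __future__ import annotations


def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg aggregation: average each weight, weighted by the number of local samples.

    Each hospital sends (weights, n_samples); raw patient records never leave the site.
    """
    total = sum(n for _, n in client_updates)
    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for weights, n in client_updates:
        if len(weights) != size:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


def local_update(weights: list[float], gradient: list[float], lr: float = 0.1) -> list[float]:
    """Hypothetical stand-in for training inside one hospital: a single gradient step."""
    return [w - lr * g for w, g in zip(weights, gradient)]


# One round: both hospitals start from the shared model and train locally.
global_w = [0.0, 0.0]
hospital_a = local_update(global_w, [-1.0, 2.0])  # computed on hospital A's own data (300 patients)
hospital_b = local_update(global_w, [3.0, 0.0])   # computed on hospital B's own data (100 patients)
global_w = federated_average([(hospital_a, 300), (hospital_b, 100)])
print(global_w)
```

Only the two short weight vectors cross the network in this round; the patient data behind each gradient stays inside its hospital.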

In fact, one of the best uses of AI is to assist in decision-making. When connected to a hospital's records, an LLM-based application can retrieve patient data, process it, and summarize, for instance, a patient's history into simple bullet points. Here's a Python sketch of how to do it:

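```python
from types import SimpleNamespace


# Hypothetical client used for illustration; a real SDK will have its own class and arguments.
class LLMClient:
    def __init__(self, api_key: str, model: str):
        self.api_key = api_key
        self.model = model

    def generate(self, prompt: str, max_tokens: int = 200, temperature: float = 0.0):
        # A real client would call the model here; this stub returns a placeholder object with .text.
        return SimpleNamespace(text=f"[{self.model}: summary of a {len(prompt)}-character prompt]")


llm = LLMClient(api_key="YOUR_KEY", model="clinical-llm-v1")

clinical_note = """
Patient: Female, 67y. Presents with progressive dyspnea, fever, and cough for 5 days.
Chest X-ray shows bilateral infiltrates. Elevated CRP. History of COPD.
"""

prompt = f"Summarize this clinical note in 3 bullet points and list top differential diagnoses:\n\n{clinical_note}"

# temperature=0.0 asks for the most deterministic output, which suits clinical summaries.
response = llm.generate(prompt, max_tokens=200, temperature=0.0)
print(response.text)
```

- Establish continuous governance and auditing: Your application must be audited strictly and regularly. Doing so lets you confirm that results are good, gather statistics on usage and misdiagnoses, and demonstrate compliance with regulations such as HIPAA, GDPR, or your country's equivalent. It is also important to keep the AI tool up to date and make sure it remains safe and working properly, since a model's performance can drift over time as patient populations and clinical practices change, so it may need to be updated or retrained.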

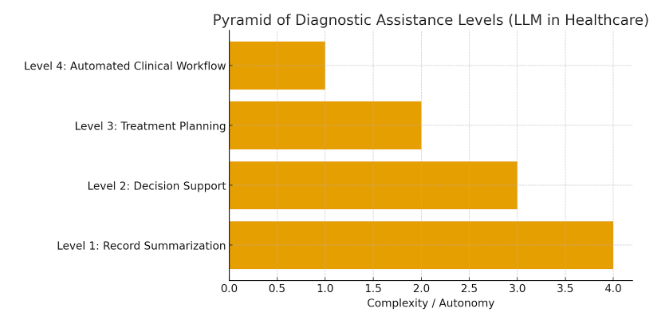

- Start small: Deploying a large language model all at once can be a huge leap. Start small instead, whether by using the application only for simpler diagnoses and clinical cases or by dedicating it to a specific subset of patients. Every step in bringing AI into healthcare matters and must be taken carefully, so make sure everything is working properly before full deployment. There are stages to how deeply AI is integrated into a hospital, and the first one isn't even AI-related; the rollout should be gradual, step by step, respecting the pace of staff and patients. The steps are as follows:

Conclusion: Balancing Innovation and Ethics in AI Healthcare

In conclusion, LLMs are already a solid reality in several high-tech hospitals, enabling very fast and surprisingly accurate diagnoses. For instance, AI applications focused on image analysis can detect cancer and other illnesses at very early stages, in some studies with precision that matches or exceeds that of specialists. This will bring greater clinical agility and accuracy and help doctors under stress, when quick processing is needed most. It can also democratize access to cutting-edge health services, especially when the model is connected to up-to-date literature or search tools, since a model on its own only knows what was in its training data.

But AI must be implemented and used responsibly, with genuine care for the patient experience. It must be done ethically, respecting privacy, avoiding legal harm to patients, physicians, and other users, and not putting anyone's data at risk of a breach. Because some LLMs are maintained by external companies that may collect information about your patients and doctors and use it to further train their models, you must weigh the trade-offs and choose the approach that best fits your situation. Lastly, the AI application must be thoroughly evaluated and supervised, following strict regulations and established principles to mitigate risks such as bias and misdiagnosis.

So, to get everything AI has to offer the healthcare sector, it must be implemented according to clear standards and rules. It must be done responsibly, ensuring that the application works securely and genuinely serves hospitals, patients, and doctors. To do so, hospitals, startups, and professionals must work together to adopt AI and ML solutions with governance, transparency, and the patient as the focal point. The future of this area is promising, and the present is already exciting: AI tools have already transformed healthcare. They will help detect illnesses at very early stages and non-invasively; sometimes they can detect them from mere photos or X-rays, potentially saving lives through early diagnosis. So, if you decide to invest in this technology and take the time to do it properly, your hospital and your future patients will benefit greatly from the modernization!