Why Your Company Should – or Shouldn’t – Use Kubernetes

Kubernetes is one of the most discussed tools used for server administration. But how and when should you use it? Find out here!

In modern software development, it is now commonplace to containerize new applications. A container packages an application together with only the libraries and dependencies it needs, and runs it in an isolated environment on the host's operating system. So, if a project runs in a container on one computer, the same container image should run on any other machine with a compatible container runtime and CPU architecture, given that it has the required processing capacity. This creates a microenvironment just for your app, reducing the risk of unexpected errors caused by differences between machines.

But as applications grow more complex and split into several microservices, it becomes hard to orchestrate all of those containers. Some companies have hundreds of containers running simultaneously, each receiving a different level of traffic and demand, and that needs to be coordinated too. In this context, Kubernetes (K8S for short) has become the most widely adopted solution for running and coordinating many containers at once. It can handle failures and errors, and add replicas of containers that are under heavy load. In this article, we will look at how this important tool works and the different ways it can improve the performance and resilience of your company's services.

Table of Contents

- What is Kubernetes

- Success Cases in the Market

- Why and When You Should Use Kubernetes

- How to Start Using Kubernetes

- Conclusion

What is Kubernetes

Kubernetes is Free and Open-Source Software (FOSS) originally created by Google and released in 2014, and since 2015 it has been maintained by the Cloud Native Computing Foundation (CNCF). It is a tool used to run and orchestrate many containers, providing fault tolerance and more efficient use of resources. It can also automatically scale the number of running containers (and, together with a cluster autoscaler, the number of nodes), automate operational tasks such as rolling out new versions, and reduce management complexity by organizing many containers at once and automatically.

Its basic structure is centered around pods, nodes, and clusters, which are directed by a control plane. In short, a pod is the smallest deployable unit in Kubernetes, and it encapsulates one or more containers. Even though a pod can run more than one container, that is the less common case, used when the containers are tightly coupled and need each other to function properly, for example a helper container that follows the main application's logs.
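To make the multi-container case concrete, here is a minimal sketch of a pod in which a web server and a small helper container share a scratch volume, so the helper can follow the server's access log. The pod name, images, and paths are assumptions chosen for illustration; the manifest is passed to kubectl apply on standard input (the "-f -" form), and the function assumes kubectl already points at a cluster.

```bash
#!/usr/bin/env bash
# Sketch: create one pod that runs two cooperating containers.
# Pod name, images, and paths are illustrative assumptions.
create_sidecar_pod() {
  kubectl apply -f - <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: web-with-log-tail
spec:
  volumes:
    - name: logs
      emptyDir: {}                    # scratch volume shared by both containers
  containers:
    - name: web
      image: nginx:1.27
      volumeMounts:
        - name: logs
          mountPath: /var/log/nginx   # nginx writes access.log here
    - name: log-tail
      image: busybox:1.36
      # -F keeps retrying until the file exists, then follows it
      command: ["sh", "-c", "tail -F /logs/access.log"]
      volumeMounts:
        - name: logs
          mountPath: /logs
EOF
}
```

After sourcing the file and calling create_sidecar_pod, kubectl logs web-with-log-tail -c log-tail should show access log lines as the web server receives requests.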

A node, on the other hand, is a worker machine, either virtual or bare metal, that runs pods through the kubelet (the node agent), kube-proxy (which maintains the node's network rules), and a container runtime. Lastly, a cluster groups several nodes, abstracting individual machines and allowing workloads to be distributed among them.
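On a running cluster, this hierarchy is easy to inspect. A minimal sketch, assuming kubectl is already configured for your cluster (the function name is our own): the wide output format adds a NODE column to the pod list, showing which machine each pod was scheduled on.

```bash
#!/usr/bin/env bash
# Sketch: list the cluster's machines and where each pod is running.
show_placement() {
  # One line per node (virtual or bare metal) in the cluster.
  kubectl get nodes -o wide
  # The NODE column shows which node each pod was scheduled on.
  kubectl get pods --all-namespaces -o wide
}
```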

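A cluster has two main elements: the control plane and the worker nodes. The control plane is the set of components (the API server, the etcd datastore, the scheduler, and the controller manager) that stores the desired state of the cluster and constantly works to make the actual state match it. You describe that desired state in YAML or JSON documents, called manifests, which declare which pods must run and how; the scheduler then decides on which node each pod runs. These manifests are key to one of K8S's greatest advantages: because they target the same Kubernetes API everywhere, workloads are largely vendor-agnostic and can be moved from one cluster or provider to another with relatively little change. Worker nodes, in turn, are the machines responsible for executing the real workloads.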
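This continuous comparison between declared and observed state is often called a reconciliation loop. The toy sketch below models a single step of it for a replica count; the function is hypothetical and only prints the action it would take, whereas real controllers act through the API server and track individual pods.

```bash
#!/usr/bin/env bash
# reconcile DESIRED OBSERVED
# Toy model of one reconciliation step: compare the replica count declared
# in a manifest with the number actually running and report the fix.
reconcile() {
  local desired=$1 observed=$2
  if (( observed < desired )); then
    echo "create $(( desired - observed ))"
  elif (( observed > desired )); then
    echo "delete $(( observed - desired ))"
  else
    echo "in sync"
  fi
}
```

For example, if a manifest asks for 3 replicas and one pod has crashed, reconcile 3 2 prints "create 1"; a real controller repeats this check every time the cluster state changes.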

This way, Kubernetes offers a well-defined hierarchy in which your workloads run. Its organization around clusters, nodes, and pods is crucial for its autoscaling capability, as pods can be automatically replicated when demand is high (for example, by a HorizontalPodAutoscaler). Kubernetes also spreads traffic across those replicas through Services, which act as built-in load balancers, and if any of the pods fail, the controllers running in the Controller Manager automatically create replacements.
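The Kubernetes documentation describes the HorizontalPodAutoscaler's core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below applies that rule with integer arithmetic and clamps the result to a minimum and maximum replica count. It deliberately ignores the real controller's tolerance band and stabilization windows, so treat it as an approximation; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# desired_replicas CURRENT METRIC TARGET MIN MAX
# Integer sketch of the HPA scaling rule:
#   desired = ceil(CURRENT * METRIC / TARGET), then clamped to [MIN, MAX].
# METRIC and TARGET share a unit, e.g. average CPU utilization in percent.
desired_replicas() {
  local current=$1 metric=$2 target=$3 min=$4 max=$5
  # For positive integers, ceil(a / b) == (a + b - 1) / b.
  local desired=$(( (current * metric + target - 1) / target ))
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}
```

For instance, 4 replicas averaging 90% CPU against a 60% target give desired_replicas 4 90 60 1 10, which prints 6: the load is spread over two extra pods until the average drops back toward the target.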

Success Cases in the Market

As Kubernetes has become a default choice for companies whose products serve thousands, even millions, of users, there are many success stories in the market. It is now a standard option for applications that deal with huge amounts of data, and companies such as Google, Spotify, and Airbnb are among its best-known references.

For instance, as this research gathered by EarthWeb shows, 76% of global internet searches are made on Google, which handles billions of searches every day. That's a massive amount of data, and results may now even be processed by AI before being returned, adding more complexity to the infrastructure. Their services must stay available almost all the time, as any outage has a worldwide impact. To serve this demand, their servers must be replicated and their containers run in parallel. Internally, Google does this with Borg, the cluster manager whose design inspired Kubernetes, and it offers the same model to its cloud customers through Google Kubernetes Engine (GKE).

Regarding Airbnb, this report by The Stack shows that their infrastructure was spread across hundreds of clusters with thousands of nodes. However, reports from 2021 showed that their cloud expenses were growing faster than their revenue, so they set a goal to reduce their “cost per booked night”. Cluster sizes were managed by hand, which was impractical at that scale: clusters could not be resized quickly enough to follow demand, leading to unnecessary expenses. They then moved to clusters that scale automatically according to demand. That alone reduced their cloud costs by 5% and removed the overhead of manually managing cluster sizes.

As for Spotify, this case study published on the Kubernetes website shows that their adoption of K8S was gradual, even though they were early adopters of the technology. Their rollout plan took years to complete, but as K8S was implemented, its bin-packing and multi-tenancy capabilities improved CPU utilization by two to three times on average. It also allowed them to create new services faster, letting them focus more on new features and performance upgrades to their product.
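Bin-packing means fitting workloads onto as few machines as possible. The Kubernetes scheduler actually filters and scores candidate nodes rather than running a textbook packing algorithm, so the sketch below is only an analogy: a first-fit decreasing heuristic that places pod CPU requests (in millicores, example values) onto identical nodes and reports how many nodes are needed. The function name is hypothetical, and it assumes every request fits on a single node.

```bash
#!/usr/bin/env bash
# nodes_needed CAPACITY_MILLICORES REQUEST...
# First-fit decreasing: sort requests largest first, place each on the first
# node with enough free capacity, and open a new node when none fits.
# Assumes no single request exceeds CAPACITY_MILLICORES.
nodes_needed() {
  local capacity=$1; shift
  local -a free=()        # remaining capacity of each opened node
  local req i placed
  for req in $(printf '%s\n' "$@" | sort -rn); do
    placed=0
    for i in "${!free[@]}"; do
      if (( free[i] >= req )); then
        free[i]=$(( free[i] - req ))
        placed=1
        break
      fi
    done
    if (( placed == 0 )); then
      free+=( "$(( capacity - req ))" )
    fi
  done
  echo "${#free[@]}"
}
```

With nodes of 1000 millicores, nodes_needed 1000 700 300 600 400 prints 2: the four workloads share two machines instead of occupying one machine each, which is the kind of consolidation behind higher CPU utilization.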

All of these examples show how much Kubernetes can help reduce the cost of running cloud applications. Sometimes it can save thousands of dollars simply by scaling capacity automatically, and that's only a start, as it has much more advanced features for fine-tuning it to your demands.

Why and When You Should Use Kubernetes

Given all of this, it should be noted that not every application benefits from Kubernetes. It fits a specific set of use cases, and it is rarely worth using on a simple application with modest infrastructure needs. In a simple app, it would add a lot of complexity, and the cost and technical overhead of implementing K8S often would not pay off. It is most useful in high-traffic environments where many services run across many nodes and clusters.

So, at the very least, Kubernetes is worth considering if your company runs many microservices or if your cloud expenses are too high. For instance, think of a SaaS whose demand occasionally spikes beyond what its servers can handle. Such a company benefits from having its cluster size managed automatically, so it neither loses performance during the peak nor wastes money once the peak is over. This is a clear case in which Kubernetes is likely a good choice, as Airbnb's experience, described earlier in this article, shows.
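For a workload like that, a common starting point is a HorizontalPodAutoscaler. A minimal sketch, assuming the Deployment already exists, its containers declare CPU requests, and a metrics source such as metrics-server is installed (on Minikube: minikube addons enable metrics-server). The function name, the 50% target, and the replica bounds are example values; the flags follow the Kubernetes HPA walkthrough, so check kubectl autoscale --help for your version.

```bash
#!/usr/bin/env bash
# enable_autoscaling DEPLOYMENT [MIN] [MAX]
# Creates a HorizontalPodAutoscaler that targets 50% average CPU utilization.
# Assumes the Deployment exists, its pods declare CPU requests,
# and a metrics source (e.g. metrics-server) is running.
enable_autoscaling() {
  local deployment=$1 min=${2:-1} max=${3:-10}
  kubectl autoscale deployment "$deployment" --cpu-percent=50 --min="$min" --max="$max"
  # The HPA is named after the Deployment; this shows current vs. target load.
  kubectl get hpa "$deployment"
}
```

Note that this scales pods. Adding and removing nodes, as in Airbnb's case, is the job of a separate cluster autoscaler, which is configured per cloud provider.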

Another great advantage is that you can keep your application largely vendor-agnostic. Because Kubernetes workloads are described in YAML manifests that use the same API on every provider, you can move to another cloud provider to suit your needs and find better prices, adjusting only provider-specific parts such as storage and load balancer settings. This way, you are less exposed to vendor lock-in, which gives you more independence and lets you chase the best opportunities in the market. K8S also offers other advantages, such as automating complex operational tasks and automatically recovering containers that fail.
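In practice, portability means the same manifest can be applied to clusters at different providers by switching the kubectl context. A sketch under assumptions: the function name, the manifest path, and the context names (such as aws-prod and gke-prod) are hypothetical, and provider-specific settings such as storage classes, load balancer annotations, and identity integration usually still need adjusting per cloud.

```bash
#!/usr/bin/env bash
# apply_everywhere MANIFEST CONTEXT...
# Applies one manifest to several clusters, one kubectl context per cluster.
# Context names come from your kubeconfig (list them with: kubectl config get-contexts).
apply_everywhere() {
  local manifest=$1; shift
  local ctx
  for ctx in "$@"; do
    echo "Applying $manifest to $ctx"
    # Stop at the first cluster that rejects the manifest.
    kubectl --context "$ctx" apply -f "$manifest" || return 1
  done
}
```

For example, apply_everywhere app.yaml aws-prod gke-prod would roll the same workload out to both clusters.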

How to Start Using Kubernetes

First of all, you must be proficient with container technologies, such as Docker or containerd. Since the pod, the smallest unit in Kubernetes, is built around containers, you need to know how to build them and configure them to do what you want. You should also understand the basics of servers: how to administer virtual and physical machines, and how the underlying infrastructure works. That's basic knowledge for any server administration, and even though Kubernetes abstracts away much of the operational work, you still need it to use this tool properly.

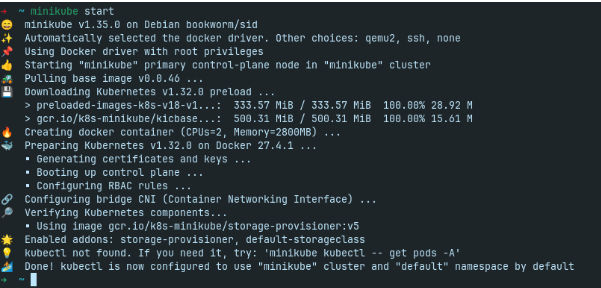

With that in mind, let's run a simple Kubernetes example. There are several tools for running Kubernetes locally for testing, and we will use Minikube, as it is one of the simplest. Before starting, make sure Docker, Minikube, and kubectl are properly installed and working on your computer (KVM is only needed if you use Minikube's KVM driver instead of Docker). Here are a few quick steps to start a local instance:

- Create a Minikube cluster with the command minikube start

This starts a local single-node cluster and configures kubectl, the Kubernetes command-line tool that runs on your machine, to talk to it. Here's the expected output:

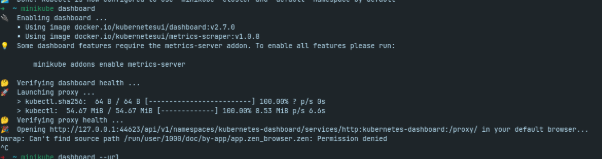

- Start the dashboard with the command minikube dashboard

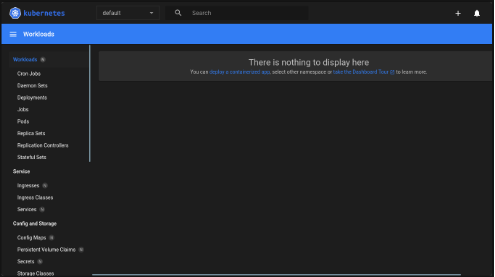

It will create a server in your computer for the management of the Kubernetes instance with a UI to make things easier. Here’s the console output and the interface:

In my case, as I use Zen Browser, it wasn’t recognized by the system. But I only had to hold Control and click on the URL (https://127.0.0.1:44623…) to open the page:

- Create an example deployment

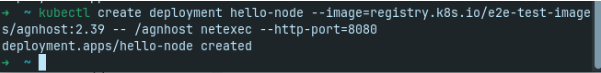

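A Deployment is a Kubernetes object that declares how many copies of a Pod should run and which container image they use, and it continuously replaces Pods that fail or are deleted. For this example, instead of creating the deployment in the dashboard, we'll use the command line. Open a new terminal (the dashboard command keeps the first one busy) and run: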

kubectl create deployment hello-node --image=registry.k8s.io/e2e-test-images/agnhost:2.39 -- /agnhost netexec --http-port=8080

We should expect the following results:

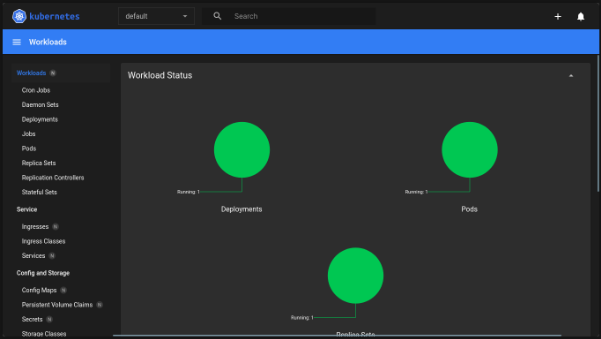

Back in the dashboard, we can now see the pod running.
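To check the result from the terminal, see the self-healing behavior in action, and clean up afterwards, here are a few helper functions you could source into your shell once the steps above are done. They are a sketch: the function names are our own, while the commands are standard kubectl and minikube subcommands. They rely on the app=hello-node label that kubectl create deployment adds to the pods.

```bash
#!/usr/bin/env bash
# Follow-up helpers for the hello-node example (assumes Minikube is running).

# Confirm the local cluster and its single node are up.
check_cluster() {
  minikube status
  kubectl get nodes
}

# Show the Deployment and the pod it manages.
inspect_hello_node() {
  kubectl get deployments hello-node
  kubectl get pods -l app=hello-node
}

# Delete the pod and watch the Deployment create a replacement
# with a new name (press Ctrl+C to stop watching).
demo_self_healing() {
  kubectl delete pod -l app=hello-node
  kubectl get pods -l app=hello-node --watch
}

# Remove the example and stop the local cluster.
cleanup_hello_node() {
  kubectl delete deployment hello-node
  minikube stop
}
```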

If you want to dive further into how it works, check the Kubernetes documentation. It is surprisingly well written and easy to navigate, so you should be able to find everything you need there.

Conclusion

Kubernetes is a very powerful tool that, at scale, can save you tens of thousands of dollars on cloud expenses. It automates many critical tasks, such as autoscaling services and recovering from failures, and it can also perform complex operational tasks on your servers. It is a very important technology that can quickly become the backbone of your cloud infrastructure, but it comes at a cost: it adds a layer of complexity to your applications, and it does not always pay off. If your project is small, and especially if it does not have many users, you probably should not use K8S.

If you think your application fits these requirements, you can contact us at Chudovo to help you implement Kubernetes on your servers. We are a consultancy company with 10+ years of expertise in developing cloud servers, 8+ years of managing DevOps operations, and more than 40 full-time experts to support you. We can help you adopt this powerful tool so your servers become more resilient and automated, and even save thousands of dollars on your cloud bills!